What I learned building my first AI-powered app

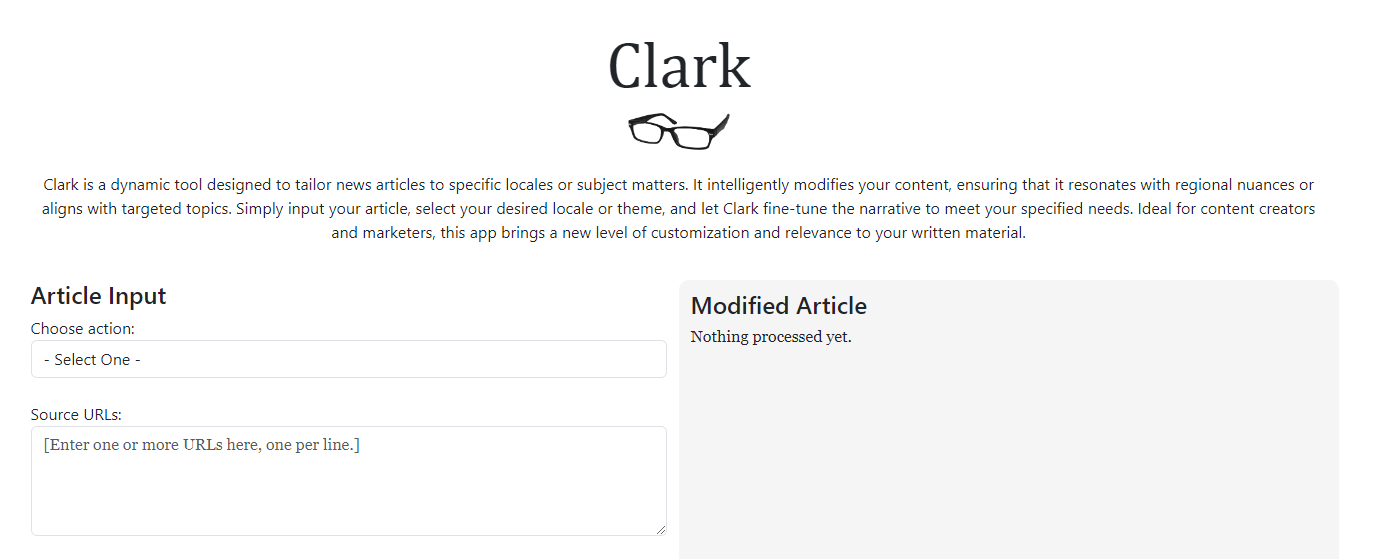

Like everyone who opened up Twitter/X at the end of November 2022, I saw a very short tweet from Sam Altman about a new chat bot he released to the public that day. "Big deal", I thought. Chat bots were commonplace then, and they all stank (stunk? Note to self: ask AI about this…).

But I used it. At first, I’ll admit that I brought an all-or-nothing attitude. This was either going to be Skynet, or Yet Another Chat Bot. Its simple, unassuming user interface certainly didn’t wow me at first, but in retrospect it had echoes of Google’s own very simple (and famously successful) UI.

Soon after the initial fascination wore off, I started looking at the developer docs to see what this could be capable of at scale. At the time, I was doing lots of research about cold outreach, trying to figure out what works and what doesn’t. The chief principle to stick to, it seems, is personalization. It’s better to send 10 ultra-personalized emails per day than it is to send 100 generic ones. So you have a classic trade-off between quality and volume, which immediately triggers the question “Wait, could I have both?”

It turns out you can. I built a simple POC that takes a name and an email address and, from those alone, produces a genuinely personalized email in about 15 seconds. However cool I thought this idea was, it turns out it had been done - I had accidentally invented Salesforce’s Einstein Prompt Builder for Sales Cloud.

A short while later, with the help of an experienced news media exec who really knows the space, I supported a fellowship team from the London School of Economics’ JournalismAI program by implementing Clark, a simple web app that uses AI to help newsrooms make articles more relevant to their audiences. This was my deepest dive into building something with AI, and the experience was pretty educational.

Clark, an AI-powered app concept for journalists and newsrooms

Importantly, I learned what an LLM is. Accepting it as a sort of linguistic Mechanical Turk was unsatisfying, so watching Andrej Karpathy’s incredible video really helped me see under the hood. I learned what many of us know now, that an LLM is a next-word prediction machine made of two primary parts: its parameters, or its pre-trained parametric knowledge (“the model”), and the executable that runs inference on that model. The model itself takes weeks or months and a few million dollars of compute to train, but inference on that model (interacting with it) is really cheap. So cheap, in fact, that OpenAI lets you use ChatGPT for free.

It also turns out that the LLM’s context window is really important. I know this is an obvious point, but it impacted how I built Clark. The challenge was that I needed to feed the model lots and lots of text in order to modify the final article, more than I could hand over in a single prompt. For this, I had to use two techniques: a rudimentary RAG implementation, and some recursive prompting.

A very basic explanation of RAG (Retrieval Augmented Generation) goes something like this: go and get contextual data and insert it into the prompt, so you don’t need to rely on pre-trained data alone. The additional data can come from anywhere - copy/pasted text from something you found online, (responsibly) scraped data from a website, or something you’ve stored in a vector database. I opted for the second approach.

Now that I had access to contextual data thanks to RAG, I had to process it in steps. Enter recursive prompting - I took source data from the web and fed it into GPT-4, getting an accurate summary back. Accurate is the key word, and massaging the prompts to get it right took some time and testing. With that in hand, I could feed the LLM-generated summaries back into GPT-4, along with my next prompt, to get the outputs I was looking for. Each further prompt in my recursive chain required roughly 2x the testing of the prompt before it, so this part was time consuming.
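
For readers who think in code, here is a minimal sketch of how those two techniques can fit together. It is not Clark’s actual code, just one way the idea might look. Everything named here is an assumption made for illustration: `llm` is a hypothetical stand-in for whatever function sends a prompt to a model and returns its text, and `chunk_text`, `build_rag_prompt`, `summarize_then_rewrite`, the prompt wording, and the 2000-character budget are all invented.

```python
from typing import Callable, List

# Hypothetical stand-in for a model call: takes a prompt, returns the reply text.
LLM = Callable[[str], str]


def chunk_text(text: str, max_chars: int = 2000) -> List[str]:
    """Split text on whitespace into pieces that fit an assumed size budget."""
    chunks: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > max_chars and current:
            # Adding this word would overflow the budget: close the chunk.
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def build_rag_prompt(task: str, context: List[str]) -> str:
    """The 'augmented' part of RAG: put retrieved text into the prompt itself."""
    sources = "\n\n".join(
        f"[Source {i + 1}]\n{piece}" for i, piece in enumerate(context)
    )
    return f"Use only the sources below.\n\n{sources}\n\nTask: {task}"


def summarize_then_rewrite(llm: LLM, source_text: str, article: str) -> str:
    """Step 1 summarizes each chunk; step 2 feeds those summaries to the next prompt."""
    summaries = [
        llm(build_rag_prompt("Summarize this source accurately.", [chunk]))
        for chunk in chunk_text(source_text)
    ]
    rewrite_task = f"Rewrite this article using the source summaries:\n{article}"
    return llm(build_rag_prompt(rewrite_task, summaries))
```

Passing `llm` in as a plain function keeps the sketch independent of any particular provider, and it makes each link in the chain easy to test on its own with a fake model before any real calls are made.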

Finally, I realized that the current version of AI we’re using (at least at the time of this keystroke) isn’t quite “intelligence” yet, not in the way the average person thinks of it. It’s not going to architect and design the app I’m looking for yet. But for fun, I prompted GPT-4 to do just that - design the app for me, and tell me what components I’ll need to build. The answer was pretty off base. It had me building out technologies that would be way too laborious, and an implementation that would be hard to maintain over time. Instead (and unsurprisingly), GPT-4 got better and better the smaller the task got.

LLMs are a little bit like this generation’s graphing calculator - prohibited by some, required by others, and always helpful. In junior high math they were forbidden, but by high school they were indispensable tools listed on the syllabus. Maybe that’s what will happen in the professional world, if only this calculator would hold steady long enough to be understood.